After more than a month of work, I finally got a Docker + Open vSwitch + DPDK VNF lab environment up and running.

Working versions

- Ubuntu kernel version: 4.4.0-131-generic

- Ubuntu release: 16.04.5

- DPDK version: 16.11.1

- Open vSwitch version: 2.6.1

- pktgen version: 3.1.1

clear.sh

To avoid conflicts, first clear out some old settings:

```shell
sudo rm /usr/local/etc/openvswitch/*
```

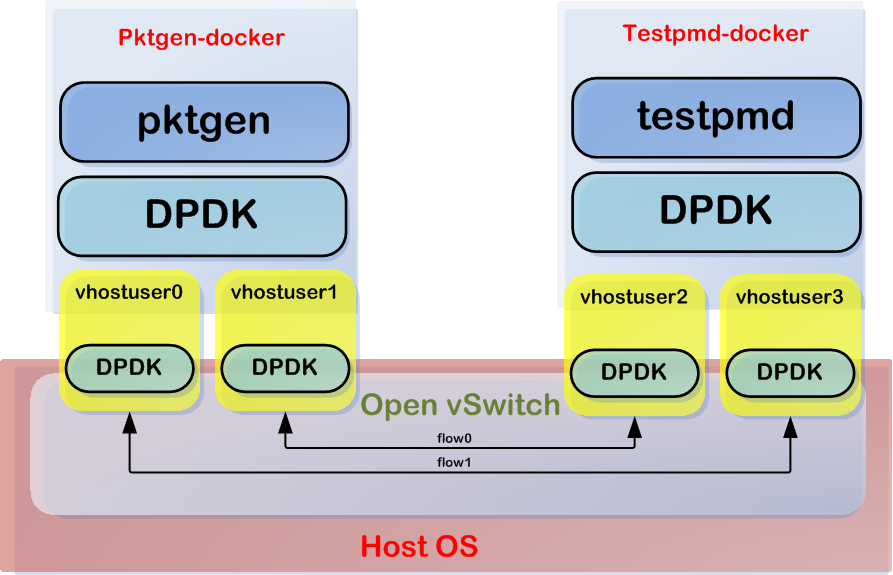

Topology

The overall structure looks roughly like this:

DPDK installation

```shell
wget http://fast.dpdk.org/rel/dpdk-16.11.1.tar.xz
```

Hugepage configuration

```shell
sudo vim /etc/default/grub
```
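The grub step above is truncated, and the post does not say which hugepage size was used. As a rough aid, here is a minimal sketch that assumes 1 GB pages. It adds up the memory each DPDK consumer asks for (OvS's dpdk-socket-mem entries plus whatever testpmd and pktgen request inside their containers) and rounds up to whole pages. `hugepages_for` and `grub_hugepage_args` are hypothetical helper names. `default_hugepagesz`, `hugepagesz` and `hugepages` are the standard kernel boot parameters.

```shell
# Hypothetical helper: add up MB amounts and round up to whole hugepages.
# $1 is the page size in MB; the remaining arguments are MB amounts
# (e.g. OvS dpdk-socket-mem entries plus each container's request).
hugepages_for() {
    local page_mb=$1 total=0 mb
    shift
    for mb in "$@"; do
        total=$((total + mb))
    done
    # Round up so the reservation never falls short of the total.
    echo $(( (total + page_mb - 1) / page_mb ))
}

# Print a kernel command-line fragment for 1 GB pages (an assumption;
# the post does not say which page size it used).
grub_hugepage_args() {
    local n
    n=$(hugepages_for 1024 "$@")
    echo "default_hugepagesz=1G hugepagesz=1G hugepages=$n"
}
```

For example, `grub_hugepage_args 1024 1024 1024 1024` covers OvS with "1024,1024" plus 1024 MB per container. It prints `default_hugepagesz=1G hugepagesz=1G hugepages=4`.

That string would go into `GRUB_CMDLINE_LINUX_DEFAULT`, followed by `sudo update-grub` and a reboot. Afterwards, `grep Huge /proc/meminfo` should show the reserved pages. As far as I know, boot-time pages are spread across NUMA nodes, so leave some headroom per node.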

pktgen installation

```shell
# Some versions fail on the first install; this one does not
```

OvS installation and configuration

Installing OvS

```shell
# Create the required directories
```

Starting OvS

```shell
# At this point we are in the openvswitch-2.6.1 directory
```

Some of the options are explained below:

- dpdk-init

  - Specifies whether OVS should initialize and support DPDK ports. This field can either be `true` or `try`. A value of `true` will cause the ovs-vswitchd process to abort on initialization failure. A value of `try` will imply that the ovs-vswitchd process should continue running even if the EAL initialization fails.

- dpdk-lcore-mask

  - Specifies the CPU cores on which dpdk lcore threads should be spawned and expects hex string (eg ‘0x123’).

- dpdk-socket-mem

  - Comma separated list of memory to pre-allocate from hugepages on specific sockets. If not specified, 1024 MB will be set for each numa node by default. The command above uses "1024,1024" because the machine has two NUMA nodes; with only one node it would be "1024".

- dpdk-hugepage-dir

  - Directory where hugetlbfs is mounted.

- vhost-sock-dir

  - Option to set the path to the vhost-user unix socket files.
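Both the masks and the socket-mem list are easy to get wrong by hand. Here is a minimal sketch, assuming a Linux host with sysfs and using three hypothetical helpers (not part of OvS or DPDK):

- `cores_to_mask` turns core IDs into the hex string that `dpdk-lcore-mask` (and `pmd-cpu-mask`) expect.
- `socket_mem` repeats a per-node amount once per NUMA node.
- `numa_nodes` counts the nodes under `/sys/devices/system/node`.

```shell
# Hypothetical helper: turn CPU core IDs into a hex mask, e.g. "2" -> 0x4.
cores_to_mask() {
    local mask=0 core
    for core in "$@"; do
        mask=$(( mask | (1 << core) ))   # set bit <core>
    done
    printf '0x%x\n' "$mask"
}

# Hypothetical helper: repeat a per-node MB amount once per NUMA node,
# producing a comma-separated dpdk-socket-mem value such as "1024,1024".
socket_mem() {
    local mb=$1 nodes=$2 out="" i=0
    while [ "$i" -lt "$nodes" ]; do
        out="${out:+$out,}$mb"
        i=$((i + 1))
    done
    echo "$out"
}

# Hypothetical helper: count NUMA nodes via sysfs, falling back to 1.
numa_nodes() {
    local n
    n=$(ls -d /sys/devices/system/node/node[0-9]* 2>/dev/null | wc -l)
    [ "$n" -gt 0 ] || n=1
    echo "$n"
}
```

For instance, `cores_to_mask 2` prints `0x4` and `socket_mem 1024 2` prints `1024,1024`. They could be passed to ovs-vsctl like this (core 1 is only an example value):

`sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask="$(cores_to_mask 1)" other_config:dpdk-socket-mem="$(socket_mem 1024 "$(numa_nodes)")"`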

Creating the OvS ports

```shell
# OvS uses core 2 for the PMD threads
```
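Only the first line of the port-creation step survives above. The sketch below prints, rather than runs, a plausible command sequence for OvS 2.6:

1. Pin PMD threads to core 2 (`pmd-cpu-mask=0x4`, i.e. bit 2 set).
2. Create a userspace bridge with `datapath_type=netdev`.
3. Add `dpdkvhostuser` ports.

Several details are assumptions, not necessarily what the author did: the bridge name `br0`, the `vhost-userN` naming (vhost-user0 appears in the pitfalls below), and the use of `ofport_request` to fix the OpenFlow port numbers. `vhost_port_cmds` is a hypothetical name.

```shell
# Hypothetical dry-run helper: print the ovs-vsctl commands for a
# userspace bridge with N dpdkvhostuser ports instead of running them.
# $1 = bridge name, $2 = number of ports (both assumptions).
vhost_port_cmds() {
    local br=$1 count=$2 i=0
    # PMD threads on core 2 only: bit 2 set gives mask 0x4.
    echo "ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=0x4"
    # The netdev datapath is the OvS userspace datapath used with DPDK.
    echo "ovs-vsctl add-br $br -- set bridge $br datapath_type=netdev"
    while [ "$i" -lt "$count" ]; do
        # ofport_request pins OpenFlow port numbers to 1..N so that
        # flow rules can refer to them predictably.
        echo "ovs-vsctl add-port $br vhost-user$i -- set Interface vhost-user$i type=dpdkvhostuser ofport_request=$((i + 1))"
        i=$((i + 1))
    done
}
```

Running `vhost_port_cmds br0 4` lets you review the commands. `vhost_port_cmds br0 4 | sudo sh` applies them against a running ovs-vswitchd. Each dpdkvhostuser port gets a socket of the same name in the vhost socket directory, which is what the containers connect to later.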

Adding flows

```shell
# Clear any flows that may be left over from earlier runs
```
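The flow step is also truncated after its first comment. If the goal is simply to forward traffic between pktgen's and testpmd's ports, each link needs one rule per direction.

A minimal sketch: `gen_flows` (hypothetical) takes OpenFlow port pairs such as `1:3` and prints both directions in ovs-ofctl flow syntax. The pairing 1:3 and 2:4 in the example comment is an assumption about the topology (pktgen on ports 1 and 2, testpmd on 3 and 4).

```shell
# Hypothetical helper: print ovs-ofctl flows that forward traffic both
# ways between each OpenFlow port pair given as "A:B".
gen_flows() {
    local pair a b
    for pair in "$@"; do
        a=${pair%%:*}   # text before the colon
        b=${pair##*:}   # text after the colon
        echo "in_port=$a,actions=output:$b"
        echo "in_port=$b,actions=output:$a"
    done
}

# Example use (needs a running ovs-vswitchd; the port pairing is assumed):
#   sudo ovs-ofctl del-flows br0
#   gen_flows 1:3 2:4 | while read -r f; do sudo ovs-ofctl add-flow br0 "$f"; done
#   sudo ovs-ofctl dump-flows br0
```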

At this point OvS is fully configured. Next, build the Docker containers.

Creating the testpmd and pktgen containers

```shell
# testpmd container
```

Starting Docker

```shell
# Start pktgen-docker
```
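Inside the containers, DPDK 16.11 apps can attach to the OvS vhost-user sockets through the `virtio_user` virtual device. Here is a sketch, assuming the OvS socket directory is mounted into the container and that you pass the in-container socket paths. `container_eal_args` (hypothetical) builds the EAL arguments:

- `--no-pci`, because the container has no physical NIC.
- A per-app `--file-prefix`, so pktgen and testpmd do not clash over hugepage files.

The app's own core and memory options (`-c`, `--socket-mem`, etc.) still need to be added.

```shell
# Hypothetical helper: build EAL arguments for a containerized DPDK 16.11
# app (testpmd or pktgen) that talks to OvS vhost-user ports through
# virtio_user vdevs. $1 = file prefix, remaining args = socket paths as
# seen inside the container (an assumption about the volume mount).
container_eal_args() {
    local prefix=$1 i=0 path out
    shift
    # No physical NICs in the container; a distinct --file-prefix keeps
    # this process's hugepage files apart from other DPDK processes.
    out="--no-pci --file-prefix=$prefix"
    for path in "$@"; do
        # One uniquely named virtio_user device per vhost-user socket.
        out="$out --vdev=virtio_user$i,path=$path"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}
```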

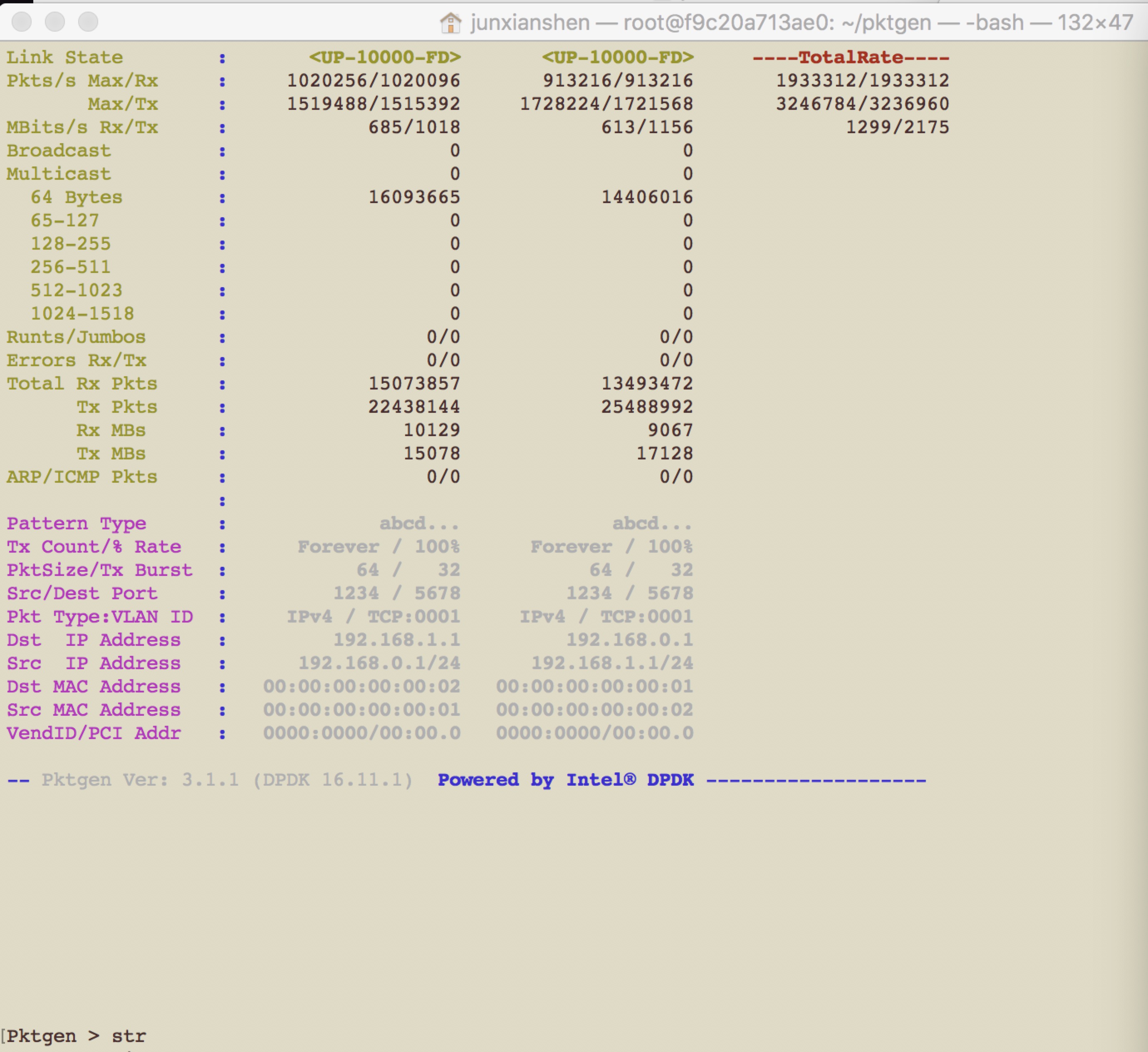

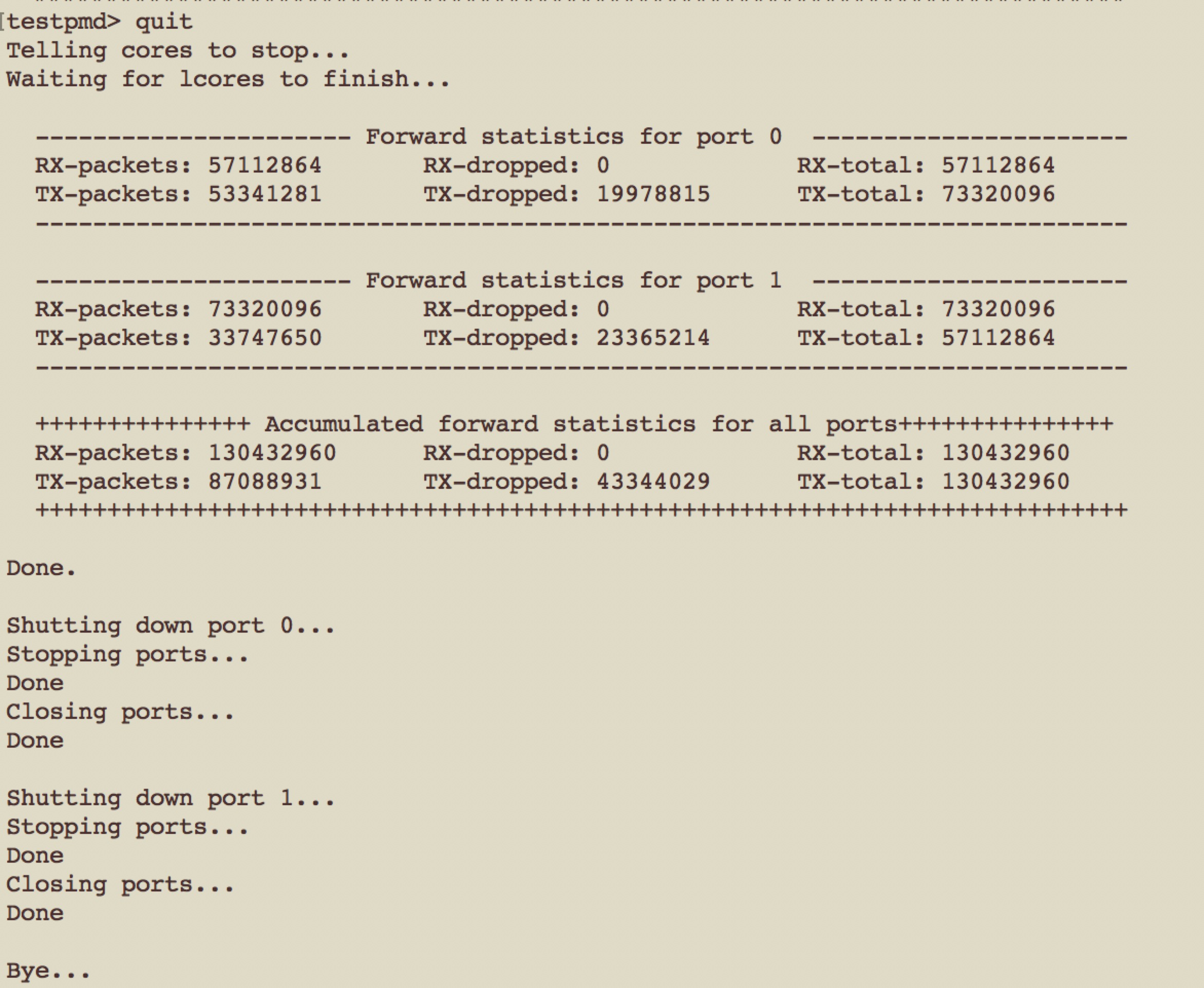

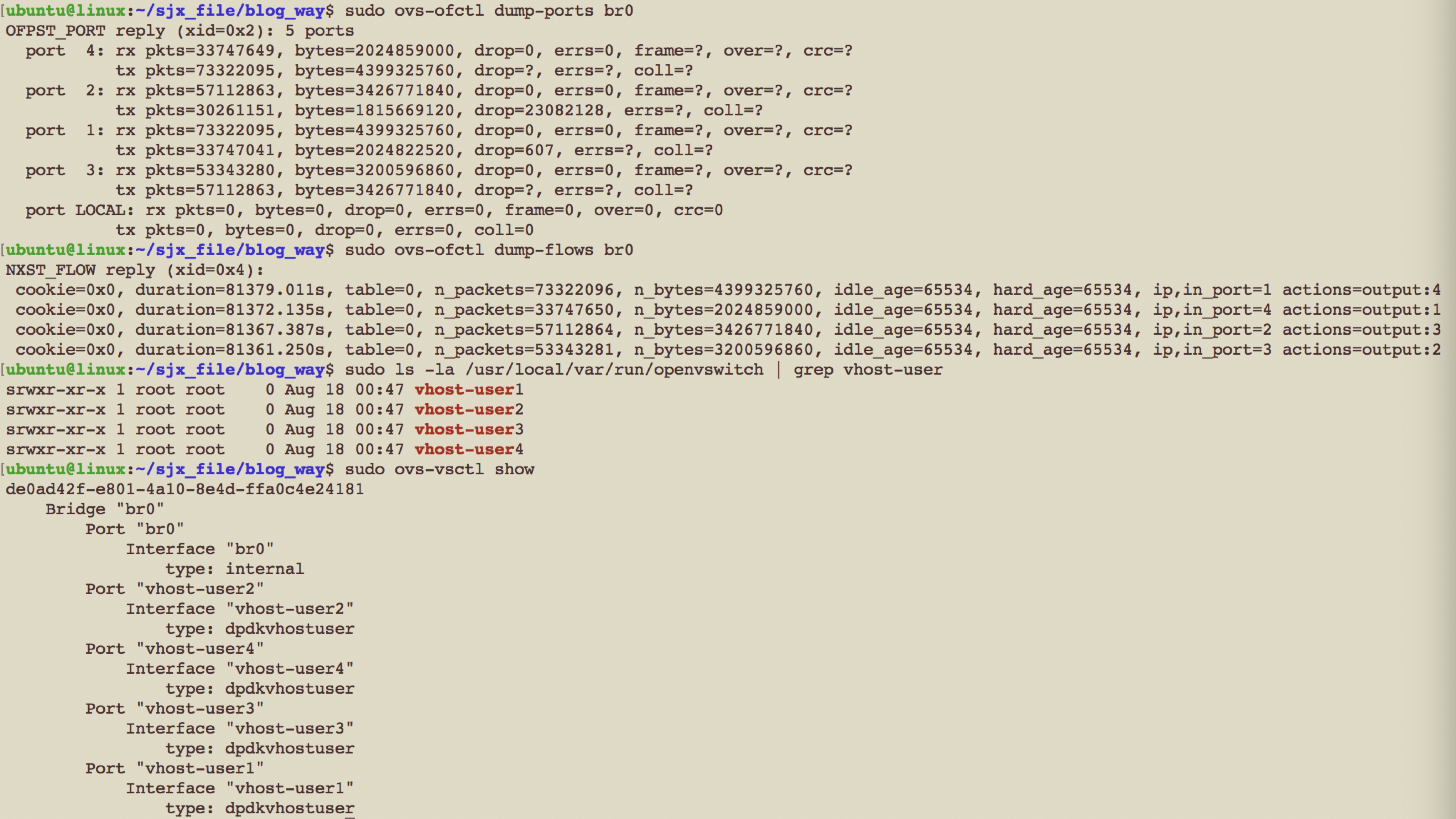

Results

Output shown in pktgen

Output shown in testpmd

Configuration output

Pitfalls I ran into

Because this setup does not run inside a VM, I did not apply the 2M -singlefile patch, so I adjusted some of the options.

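In theory, either of DPDK's options `--socket-mem` (memory per NUMA socket) and `-m` (total memory) should work. Yet on a VM started with Vagrant on my Mac, `--socket-mem` simply did not work. Getting a configuration to work outside the exact versions a guide was written for is very painful.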

OvS sometimes threw errors when adding ports. As it turned out, debugging got much easier once I had a log file.

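- `could not add network device vhost-user0 to ofproto (No such device)`. My guess is that something was incompatible with the Linux kernel version, because the error never came back after I switched kernels. My workaround at the time was to add the port, delete it, and add it again. However, that setup never produced any traffic, so I cannot tell whether the workaround was actually correct.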

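- `could not allocate memory`, or something close to that. It happened because I started ovs-vswitchd with the socket memory set to "1024" while the machine had two NUMA nodes, so the other node got no memory at all.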
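The second error comes down to a socket-mem list that leaves a NUMA node without memory: "1024" on a two-node machine behaves like "1024,0". Here is a small sanity check, assuming (as in this setup) that PMD threads or vhost ports may end up on any node, so every node needs a non-zero amount.

`check_socket_mem` (hypothetical) compares the number of entries with the node count and rejects zero entries. The node count can come from `ls -d /sys/devices/system/node/node[0-9]* | wc -l`.

```shell
# Hypothetical sanity check: dpdk-socket-mem ($1) should have exactly one
# non-zero entry per NUMA node ($2). Returns non-zero and explains why if not.
check_socket_mem() {
    local value=$1 nodes=$2 count=0 mb
    local IFS=,          # split the value on commas
    for mb in $value; do
        # Non-numeric or zero entries mean that node gets no memory.
        if ! [ "$mb" -gt 0 ] 2>/dev/null; then
            echo "NUMA node $count gets no memory ('$mb')" >&2
            return 1
        fi
        count=$((count + 1))
    done
    if [ "$count" -ne "$nodes" ]; then
        echo "socket-mem '$value' lists $count node(s), machine has $nodes" >&2
        return 1
    fi
}
```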

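When there is no traffic

- The PMD cores may be assigned to the wrong NUMA node, leaving some socket without any usable PMD thread.

- DPDK's `-c` setting may be inconsistent with the coremask of testpmd or pktgen, i.e. the coremask uses cores that are not included in the `-c` mask.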
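Both causes can be checked with plain shell arithmetic before launching anything. Here is a sketch with two hypothetical helpers:

- `mask_is_subset` verifies that an application coremask only uses cores enabled by the EAL `-c` mask.
- `pmd_covers_node` checks that `pmd-cpu-mask` includes at least one core from a NUMA node's core list, which `lscpu` reports as "NUMA nodeN CPU(s)".

```shell
# Hypothetical check: succeed only if every core in the app coremask ($1)
# is also in the EAL -c mask ($2). Masks are hex strings such as 0x1e.
mask_is_subset() {
    local inner=$1 outer=$2
    [ "$(( $inner & ~$outer ))" -eq 0 ]
}

# Hypothetical check: succeed if pmd-cpu-mask ($1) includes at least one
# of the given cores (e.g. the core IDs lscpu lists for one NUMA node).
pmd_covers_node() {
    local pmd_mask=$1 core
    shift
    for core in "$@"; do
        if [ "$(( ($pmd_mask >> core) & 1 ))" -eq 1 ]; then
            return 0
        fi
    done
    return 1
}
```

For example, with `-c 0xe0` and an app coremask of `0x10` (core 4), `mask_is_subset 0x10 0xe0` fails, which points straight at the mismatch.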

References

- https://github.com/intel/SDN-NFV-Hands-on-Samples/tree/master/DPDK_in_Containers_Hands-on_Lab/dpdk-container-lab

- https://www.youtube.com/watch?v=hEmvd7ZjkFw&index=1&list=PLg-UKERBljNx44Q68QfQcYsza-fV0ARbp

- https://blog.csdn.net/me_blue/article/details/78589592

- http://blog.sina.com.cn/s/blog_da4487c40102v2ic.html

- http://docs.openvswitch.org/en/latest/intro/install/dpdk/

- All the other specific errors were solved by searching Google

- The `--log-file` issue was solved by asking a senior student in my lab